Code:

import csv

import requests
from bs4 import BeautifulSoup

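def get_html(url):
    # Download a page and return its HTML as text
    try:
        r = requests.get(url, timeout=30)
        return r.text
    except Exception as ex:
        print('Error in get_html():', ex)
        # Return an empty string so BeautifulSoup does not receive None
        return ''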

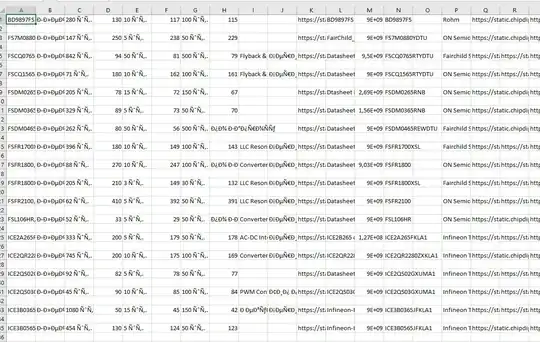

def write_csv(data):
    with open('chip_dip.csv', 'a') as f:
        writer = csv.writer(f, delimiter=';')
        writer.writerow((data['name'],
                         data['path'],
                         data['quantity1'],
                         data['price1'],
                         data['quantity2'],
                         data['price2'],
                         data['quantity3'],
                         data['price3'],
                         data['descriptions'],
                         data['params'],
                         data['documentation_href'],
                         data['documentation_description'],
                         data['item_number'],
                         data['artikul'],
                         data['part_number'],
                         data['brand'],
                         data['full_jpeg'],
                         data['small_jpeg'],
                         data['url']))

def get_data(url, path):
    html = get_html(url)
    soup = BeautifulSoup(html, 'lxml')

    # Every field falls back to an empty string when its element is missing
    try:
        product_name = soup.find('div', class_='main-header').find('h1').text.strip()
    except Exception:
        product_name = ''

    try:
        quantity1 = soup.find('div', class_='product__extrainfo-row').find('span').find('b').text.strip()
    except Exception:
        quantity1 = ''

    try:
        price1 = soup.find('span', class_='ordering__value').text.strip()
    except Exception:
        price1 = ''

    try:
        quantity2 = soup.find('div', class_='ordering__discount nw').find('b').text.strip()
    except Exception:
        quantity2 = ''

    try:
        price2 = soup.find('div', class_='ordering__discount nw').find('span', class_='price').text.strip()
    except Exception:
        price2 = ''

    try:
        quantity3 = soup.find_all('div', class_='ordering__discount nw')[-1].find('b').text.strip()
    except Exception:
        quantity3 = ''

    try:
        price3 = soup.find_all('div', class_='ordering__discount nw')[-1].find('span', class_='price').text.strip()
    except Exception:
        price3 = ''

    try:
        descriptions = soup.find('div', class_='showhide item_desc').text.strip()
        print(descriptions)
    except Exception:
        descriptions = ''

    try:
        params = ''
        product_params = soup.find_all('div', class_='showhide')[1].find_all('tr')
        for item_params in product_params:
            name = item_params.find('td', class_='product__param-name').text.strip()
            description = item_params.find('td', class_='product__param-value').text.strip()
            param = f'{name} : {description} '
            params += param
    except Exception:
        params = ''

    try:
        documentation_href = soup.find('div', class_='product__documentation ptext').find(
            'a', class_='link download__link with-pdfpreview').get('href')
    except Exception:
        documentation_href = ''

    try:
        documentation_description = soup.find('div', class_='product__documentation ptext').find(
            'a', class_='link download__link with-pdfpreview').text.strip()
    except Exception:
        documentation_description = ''

    try:
        item_numbers = soup.find('div', class_='product_main-ids ptext').find_all('div', class_='product_main-id')
        item_number = item_numbers[0].find_all('span')[-1].text.strip()
    except Exception:
        item_number = ''

    try:
        artikuls = soup.find('div', class_='product_main-ids ptext').find_all('div', class_='product_main-id')
        artikul = artikuls[1].find('span', itemprop='model').text.strip()
    except Exception:
        artikul = ''

    try:
        part_numbers = soup.find_all('div', class_='product_main-id')
        part_number = part_numbers[2].find('span', itemprop='mpn').text
    except Exception:
        part_number = ''

    try:
        brand = soup.find('div', class_='product_main-ids ptext').find('a', itemprop='brand').text.strip()
    except Exception:
        brand = ''

    try:
        full_jpeg = soup.find_all('span', class_='galery')[0].find('img').get('src')
    except Exception:
        full_jpeg = ''

    try:
        small_jpeg = soup.find_all('span', class_='galery')[1].find('img').get('src')
    except Exception:
        small_jpeg = ''

    data = {'name': product_name,
            'path': path,
            'quantity1': quantity1,
            'price1': price1,
            'quantity2': quantity2,
            'price2': price2,
            'quantity3': quantity3,
            'price3': price3,
            'descriptions': descriptions,
            'params': params,
            'documentation_href': documentation_href,
            'documentation_description': documentation_description,
            'item_number': item_number,
            'artikul': artikul,
            'part_number': part_number,
            'brand': brand,
            'full_jpeg': full_jpeg,
            'small_jpeg': small_jpeg,
            'url': url}

    write_csv(data)

def get_block_url(block_name, catalog_header, category_name, item_url):
    for page in range(1, 1001):
        url = item_url + f'?page={page}'
        html = get_html(url)
        soup = BeautifulSoup(html, 'lxml')
        blocks = soup.find_all('tr', class_='with-hover')
        if len(blocks) > 0:
            for block in blocks:
                product_url = 'https://www.chipdip.ru' + block.find('td', class_='h_name').find(
                    'a', class_='link').get('href')
                product_avtor = block.find('div', class_='nw').find('span').text.strip()
                product_path = f'{block_name}/{catalog_header}/{category_name}/{product_avtor}/'
                get_data(product_url, product_path)
        else:
            break

def get_categories_urls(block_name, url):
    html = get_html(url)
    soup = BeautifulSoup(html, 'lxml')
    items = soup.find_all('div', class_='catalog__g1 clear')
    for item in items:
        catalog_header = item.find('div', class_='catalog__header').find(
            'a', class_='link link_dark like-header like-header_3').text.strip()
        catalog_items = item.find_all('li', class_='catalog__item')
        for catalog_item in catalog_items:
            category_url = 'https://www.chipdip.ru' + catalog_item.find('a', class_='link').get('href')
            category_name = catalog_item.find('a', class_='link').text.strip()
            get_block_url(block_name, catalog_header, category_name, category_url)

def get_content(html):
    soup = BeautifulSoup(html, 'lxml')
    blocks = soup.find('ul', class_='cat-menu').find_all('li')
    for block in blocks:
        block_url = 'https://www.chipdip.ru' + block.find('a').get('href')
        block_name = block.find('a').text.strip()
        get_categories_urls(block_name, block_url)

def main():
    url = 'https://www.chipdip.ru'
    get_content(get_html(url))


if __name__ == '__main__':
    main()

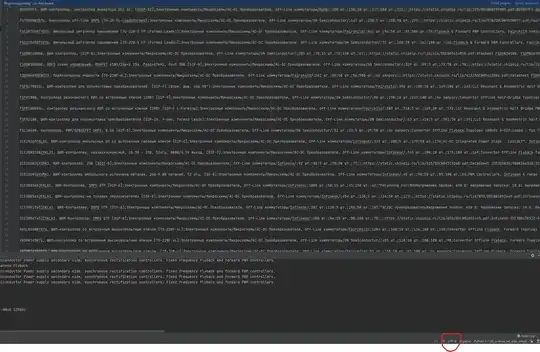

When writing to the CSV file I get this error: UnicodeEncodeError: 'charmap' codec can't encode character '\x92' in position 935: character maps to <undefined>. I found a suggested fix, which is to open the file like this: with open('chip_dip.csv', 'a', encoding="utf-8") as f: With that change the error no longer appears, but the text in the CSV file comes out garbled, with unintelligible characters in it. What should I do?
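For reference, here is a minimal sketch of the two symptoms described above. It rests on assumptions that are not confirmed by the post: that the platform default encoding is cp1251 (typical for Russian-locale Windows), and that the garbling comes from the program used to view the file (for example, a spreadsheet) guessing a legacy code page instead of UTF-8. The helper `write_row`, the file name `chip_dip_demo.csv`, and the sample row are hypothetical. Writing with the standard `utf-8-sig` codec puts a byte order mark at the start of the file, which many viewers use as a hint that the content is UTF-8.

```python
import csv
import os
import tempfile


def write_row(path, row, encoding):
    # Hypothetical helper; newline='' is what the csv module docs
    # recommend for files that are passed to csv.writer
    with open(path, 'a', encoding=encoding, newline='') as f:
        csv.writer(f, delimiter=';').writerow(row)


# Sample data (an assumption): Cyrillic text plus the U+0092 character
# named in the error message
row = ('Резистор 10 кОм', 'it\x92s')

# Symptom 1: a legacy code page (cp1251 assumed here) cannot encode U+0092
try:
    row[1].encode('cp1251')
    legacy_ok = True
except UnicodeEncodeError:
    legacy_ok = False

# Symptom 2, one possible remedy: write UTF-8 with a byte order mark
path = os.path.join(tempfile.mkdtemp(), 'chip_dip_demo.csv')
write_row(path, row, 'utf-8-sig')

with open(path, 'rb') as f:
    has_bom = f.read().startswith(b'\xef\xbb\xbf')

# Reading back with the same codec strips the mark again
with open(path, encoding='utf-8-sig', newline='') as f:
    rows = list(csv.reader(f, delimiter=';'))

print(legacy_ok, has_bom, rows == [list(row)])
```

If the file still looks wrong after this, the text may already be damaged before it is written, for instance when the HTTP response is decoded with the wrong charset; that would need checking separately.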